
Building a music recognition app in SwiftUI with ShazamKit

At WWDC 2021, Apple, among other things, released the iOS 15 SDK. This SDK contains a new API, ShazamKit, that lets your apps communicate with Shazam’s vast database of song samples. In this tutorial, we’ll build a music recognition app in SwiftUI that uses the microphone of an iOS or iPadOS device and queries Shazam’s servers to fetch music metadata. Sounds fun? Let’s get started!

Prerequisite

This tutorial uses the Xcode 13 beta, which includes the new ShazamKit framework. ShazamKit is only available on iOS 15 and above.

Creating the project

Open Xcode 13 and create a new app that uses the SwiftUI life cycle (which should now be the default). Name your app anything you wish and hit Create. Copy the app’s bundle ID.

Adding entitlements

Head over to the Certificates, Identifiers & Profiles section of the Apple Developer website and log in with your developer account. Click Identifiers in the sidebar and search for your app’s bundle ID in the list. If your app’s bundle ID isn’t listed, click the plus (+) button and register a new App ID with that bundle ID. Then open App Services and enable ShazamKit for your app.

Coding

Now that we’ve set up the entitlements for our app, we can start coding. Create a new Swift file called ContentViewModel.swift.

ContentViewModel.swift

This class will be our view model. It will be responsible for processing the audio and will also handle the ShazamKit delegate callbacks. Create a new class called ContentViewModel that inherits from NSObject and conforms to ObservableObject.

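import AVFoundation  // AVAudioEngine, AVAudioSession
import Combine       // ObservableObject, @Published
import ShazamKit     // SHSession, SHSessionDelegate

class ContentViewModel: NSObject, ObservableObject { }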

Why NSObject?

Because we’ll later extend this class to conform to ShazamKit’s SHSessionDelegate protocol. SHSessionDelegate is an Objective-C protocol that inherits from NSObjectProtocol, so a class that conforms to it needs to inherit from NSObject.

Why ObservableObject?

This class will act as the source of truth for the view that we’ll build later. Conforming it to ObservableObject lets the view subscribe to the class and listen for changes to its @Published properties. Each change triggers a re-render of the view with the new data.

Creating a ShazamMedia object

We’ll create a custom type to hold the data we extract from the delegate’s callback. To do this, create a new struct called ShazamMedia with the following properties. It can live in the same file, outside of the class.

struct ShazamMedia: Decodable {
    let title: String?
    let subtitle: String?
    let artistName: String?
    let albumArtURL: URL?
    let genres: [String]
}

These properties match the types of the data that the delegate will provide, which we’ll discuss in a moment.
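ShazamMedia is declared Decodable, although the tutorial never decodes it. The sketch below shows what that conformance allows, for example building sample data for SwiftUI previews. The JSON payload and its values are made up for illustration. Synthesized decoding treats a missing key for an optional property as nil. The genres key, by contrast, must be present because that property isn’t optional.

```swift
import Foundation

// Same struct as in the tutorial.
struct ShazamMedia: Decodable {
    let title: String?
    let subtitle: String?
    let artistName: String?
    let albumArtURL: URL?
    let genres: [String]
}

// Hypothetical sample payload, e.g. for previews.
// "subtitle" is deliberately omitted, so it decodes as nil.
let sampleJSON = Data("""
{
  "title": "Sample Song",
  "artistName": "Sample Artist",
  "albumArtURL": "https://example.com/art.jpg",
  "genres": ["Pop"]
}
""".utf8)

// try! is acceptable for a fixed fixture; real network data deserves do/catch.
let sample = try! JSONDecoder().decode(ShazamMedia.self, from: sampleJSON)
print(sample.title ?? "no title", sample.subtitle ?? "no subtitle")
// Sample Song no subtitle
```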

Back to ContentViewModel

Now that we’ve created the custom type, we can start building out the view model. Start by adding two @Published properties to the class:

@Published var shazamMedia = ShazamMedia(
    title: "Title…",
    subtitle: "Subtitle…",
    artistName: "Artist Name…",
    albumArtURL: nil, // no artwork until a match arrives; the view shows its placeholder
    genres: ["Pop"]
)

@Published var isRecording = false

When a @Published property of a class conforming to ObservableObject changes, every view subscribed to that class reloads itself with the new data. So any time either of these properties changes value, the view will reload.

Next, create these three properties in the class:

private let audioEngine = AVAudioEngine()
private let session = SHSession()
private let signatureGenerator = SHSignatureGenerator()

- audioEngine: An AVAudioEngine contains a group of connected AVAudioNodes (“nodes”), each of which performs an audio signal generation, processing, or input-output task.
- session: SHSession is part of the new ShazamKit framework (SH is the framework’s class prefix). It performs our matching requests against Shazam’s catalog and reports the results to its delegate.
- signatureGenerator: SHSignatureGenerator converts audio data into SHSignature instances. Shazam matches these compact signatures rather than raw audio. Note that SHSession’s matchStreamingBuffer(_:at:), which we use below, generates signatures from the buffers it receives by itself, so this app doesn’t strictly need the generator.

init

Since ContentViewModel inherits from NSObject, we’ll override the default init and call super.init(). In this init method, we’ll also set the delegate of the session object to self.

override init() {
    super.init()
    session.delegate = self
}

Conforming to SHSessionDelegate

Now that we’ve set the session object’s delegate to self, the class has to conform to SHSessionDelegate. Below the class, add an extension that conforms ContentViewModel to SHSessionDelegate.

extension ContentViewModel: SHSessionDelegate { }

Wiring up the delegates

SHSessionDelegate has two optional methods:

- session(_:didFind:), called when Shazam finds a match
- session(_:didNotFindMatchFor:error:), called when there’s no match or an error occurs

These methods work exactly how they sound (pun intended). For this tutorial, we’ll use session(_:didFind:) to retrieve the song’s metadata once Shazam identifies it.

In the extension, implement this method:

func session(_ session: SHSession, didFind match: SHMatch) { }

The method gives us two parameters: session and match. We’re interested in match, which contains the actual media items. First, fetch the mediaItems property of match:

let mediaItems = match.mediaItems

mediaItems is an array because Shazam can find multiple matches for the same song signature. For this tutorial, we’ll only consider the first item in the array.

if let firstItem = mediaItems.first {
    let _shazamMedia = ShazamMedia(
        title: firstItem.title,
        subtitle: firstItem.subtitle,
        artistName: firstItem.artist,
        albumArtURL: firstItem.artworkURL,
        genres: firstItem.genres
    )
    DispatchQueue.main.async {
        self.shazamMedia = _shazamMedia
    }
}

Let’s quickly go through what’s happening here. We access the first element of mediaItems using if let, since first returns an optional (the array can be empty). Once we have the first element, we create a new instance of the ShazamMedia struct we defined earlier and assign it to the class’s @Published shazamMedia property.

This assignment has to happen on the main thread. As mentioned, setting a @Published property makes SwiftUI reload the views subscribed to the class, and all UI updates must happen on the main thread. Nothing guarantees that the delegate callback arrives on the main thread, so we make the assignment inside DispatchQueue.main.async.
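Here is the first-match logic on its own, as a sketch that runs without ShazamKit. MatchedItemStub is a hypothetical stand-in for SHMatchedMediaItem. It exposes only the properties the delegate method reads: title, subtitle, artist, artworkURL and genres. In the app you would work with match.mediaItems directly. The main-queue hop is left out here.

```swift
import Foundation

struct ShazamMedia {
    let title: String?
    let subtitle: String?
    let artistName: String?
    let albumArtURL: URL?
    let genres: [String]
}

// Hypothetical stand-in for ShazamKit's SHMatchedMediaItem (not a real type).
struct MatchedItemStub {
    let title: String?
    let subtitle: String?
    let artist: String?
    let artworkURL: URL?
    let genres: [String]
}

// Mirrors `if let firstItem = mediaItems.first`: only the first match is
// used, and an empty array produces nil instead of a crash.
func makeShazamMedia(from mediaItems: [MatchedItemStub]) -> ShazamMedia? {
    guard let firstItem = mediaItems.first else { return nil }
    return ShazamMedia(
        title: firstItem.title,
        subtitle: firstItem.subtitle,
        artistName: firstItem.artist,   // SHMatchedMediaItem calls it `artist`
        albumArtURL: firstItem.artworkURL,
        genres: firstItem.genres
    )
}

let items = [
    MatchedItemStub(title: "First", subtitle: nil, artist: "Artist A",
                    artworkURL: URL(string: "https://example.com/a.jpg"), genres: ["Pop"]),
    MatchedItemStub(title: "Second", subtitle: nil, artist: "Artist B",
                    artworkURL: nil, genres: [])
]

let media = makeShazamMedia(from: items)
print(media?.title ?? "none")   // First
```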

Configuring the microphone

Since microphone access is a privacy-sensitive feature, we first have to add a new key to the project’s Info.plist file. Add the Privacy - Microphone Usage Description (NSMicrophoneUsageDescription) key. Set its value to the message you’d like to show users when asking for permission to access their device’s microphone.

Back to ContentViewModel

Create a new method called startOrEndListening(). It will be called every time the user taps a button that we’ll configure later in the view. The method starts the audio engine recording if the engine isn’t already running, or stops the engine if it is. To add this initial check, type the following code inside the function:

guard !audioEngine.isRunning else {
    audioEngine.stop()
    // A bus can hold only one tap, so remove the one installed below;
    // otherwise installing it again on the next start would crash.
    audioEngine.inputNode.removeTap(onBus: 0)
    DispatchQueue.main.async {
        self.isRecording = false
    }
    return
}

We’ve created a guard clause that only lets the rest of the method run if the audioEngine isn’t already running. If it is running, the method stops the engine and removes the tap on the input node, so the next call can install a fresh one. It then sets the @Published isRecording property to false, indicating that the microphone isn’t listening and no sound signatures are being processed.
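The start/stop toggle is easier to reason about, and to unit-test, if the engine sits behind a small protocol. The sketch below is an assumption rather than part of the tutorial’s code:

- AudioInput, FakeInput and ListeningController are hypothetical names.
- The [Float] closure stands in for the AVAudioPCMBuffer tap block.
- The permission prompt and main-queue hops are omitted.

FakeInput enforces the one-tap-per-bus rule by throwing, where AVAudioNode raises an exception instead. For that reason, the sketch’s stop path removes the tap before a later start installs a new one, and isRecording only becomes true after start() succeeds.

```swift
// Hypothetical abstraction over the AVAudioEngine calls used by startOrEndListening().
protocol AudioInput: AnyObject {
    var isRunning: Bool { get }
    func installTap(_ handler: @escaping ([Float]) -> Void) throws
    func removeTap()
    func start() throws
    func stop()
}

enum FakeInputError: Error { case tapAlreadyInstalled }

// Fake engine: allows a single tap, like a real input bus.
final class FakeInput: AudioInput {
    private(set) var isRunning = false
    private var handler: (([Float]) -> Void)?

    func installTap(_ handler: @escaping ([Float]) -> Void) throws {
        guard self.handler == nil else { throw FakeInputError.tapAlreadyInstalled }
        self.handler = handler
    }
    func removeTap() { handler = nil }
    func start() throws { isRunning = true }
    func stop() { isRunning = false }

    // Simulates the microphone delivering one buffer to the tap.
    func deliver(_ samples: [Float]) {
        if isRunning { handler?(samples) }
    }
}

final class ListeningController {
    private let input: AudioInput
    private(set) var isRecording = false
    private(set) var buffersSent = 0

    init(input: AudioInput) { self.input = input }

    func startOrEndListening() {
        guard !input.isRunning else {
            input.stop()
            input.removeTap()      // free the bus for the next start
            isRecording = false
            return
        }
        do {
            try input.installTap { [weak self] _ in
                // In the app: session.matchStreamingBuffer(buffer, at: nil)
                self?.buffersSent += 1
            }
            try input.start()
            isRecording = true     // only after the engine really started
        } catch {
            input.removeTap()
            isRecording = false
        }
    }
}

let input = FakeInput()
let controller = ListeningController(input: input)
controller.startOrEndListening()   // start
input.deliver([0.1, 0.2])
controller.startOrEndListening()   // stop
controller.startOrEndListening()   // start again
print(controller.isRecording, controller.buffersSent)   // true 1
```

The final start succeeds because the stop branch removed the old tap. Without removeTap() there, installTap would throw tapAlreadyInstalled and the controller would stay idle.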

Now, paste this code below the end of the guard statement.

let audioSession = AVAudioSession.sharedInstance()
audioSession.requestRecordPermission { granted in
    guard granted else { return }

    // The default session category doesn't allow audio input,
    // so configure the session for recording before activating it.
    try? audioSession.setCategory(.record)
    try? audioSession.setActive(true, options: .notifyOthersOnDeactivation)

    let inputNode = self.audioEngine.inputNode
    let recordingFormat = inputNode.outputFormat(forBus: 0)
    inputNode.installTap(onBus: 0,
                         bufferSize: 1024,
                         format: recordingFormat) { (buffer: AVAudioPCMBuffer, when: AVAudioTime) in
        // SHSession converts each buffer into a signature and matches it.
        self.session.matchStreamingBuffer(buffer, at: nil)
    }

    self.audioEngine.prepare()
    do {
        try self.audioEngine.start()
    } catch {
        assertionFailure(error.localizedDescription)
        // Don't report "listening" if the engine failed to start.
        inputNode.removeTap(onBus: 0)
        return
    }

    DispatchQueue.main.async {
        self.isRecording = true
    }
}

I won’t go too in-depth here since the code is fairly self-explanatory, but here’s what it does:

- It first asks the user for permission to record.
- Once permission is granted, it activates the audio session and grabs the engine’s inputNode and its recording format.
- It then installs a tap on the input node. The tap’s closure hands us each AVAudioPCMBuffer captured from the microphone.
- We pass each buffer straight to session.matchStreamingBuffer(_:at:), which converts the audio into signatures and continuously tries to match them.

Outside of this closure, we prepare the audioEngine and then start it. Since starting the audioEngine can throw an error, we wrap the call in a do-catch block and handle errors by asserting with the error’s description.

Once the engine has started, we set isRecording to true on the main thread. This indicates that the app is listening to audio and continuously matching it against Shazam’s database.

Creating the view

Head over to ContentView.swift and add a new property to access the ContentViewModel. Add this line inside the struct but outside of the body property:

@StateObject private var viewModel = ContentViewModel()

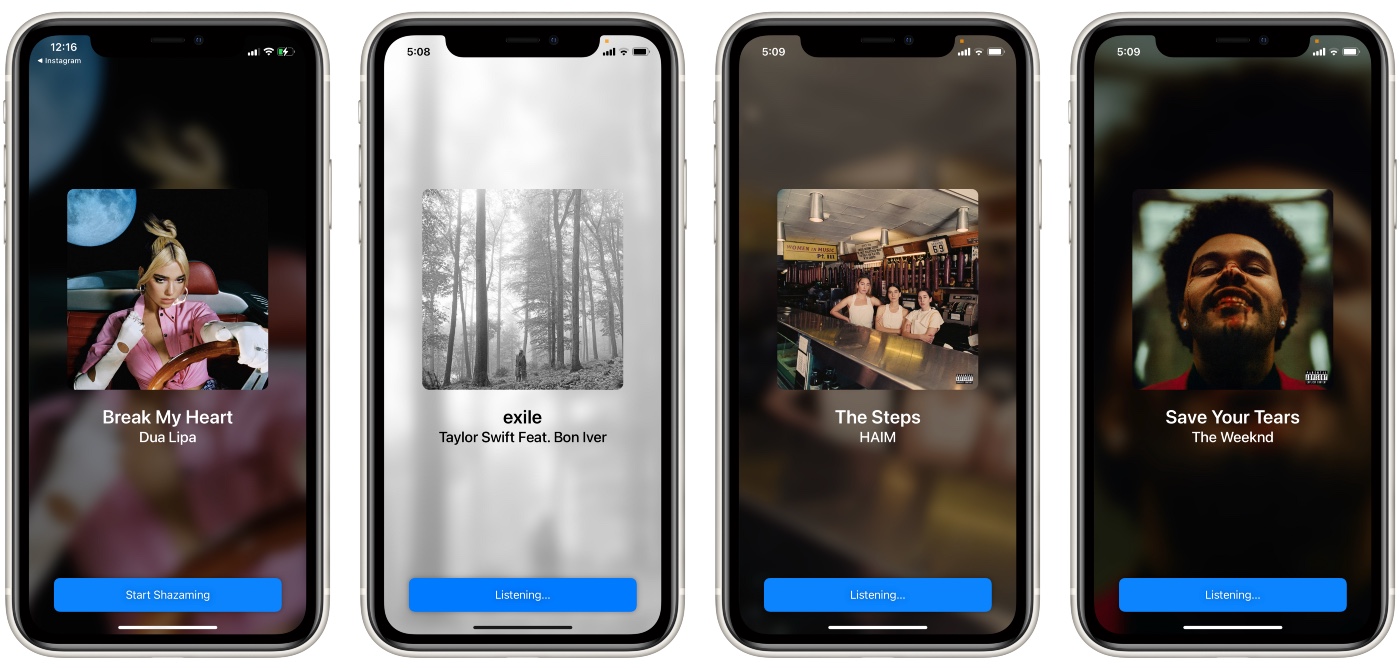


As we can see from the diagram above, the view is built from these layers:

- A ZStack whose bottom layer is the album art shown full screen. It is scaled to fill, blurred and at reduced opacity.
- On top of it, inside the same ZStack, a VStack with the album art, song title and artist name.
- Below those, a Button with the new .controlSize(.large) modifier to start or stop listening.

Paste this code inside your view’s body property:

ZStack {
    // Full-screen, blurred background version of the album art
    AsyncImage(url: viewModel.shazamMedia.albumArtURL) { image in
        image
            .resizable()
            .scaledToFill()
            .blur(radius: 10, opaque: true)
            .opacity(0.5)
            .ignoresSafeArea()
    } placeholder: {
        EmptyView()
    }

    VStack(alignment: .center) {
        Spacer()
        AsyncImage(url: viewModel.shazamMedia.albumArtURL) { image in
            image
                .resizable()
                .frame(width: 300, height: 300)
                .aspectRatio(contentMode: .fit)
                .cornerRadius(10)
        } placeholder: {
            RoundedRectangle(cornerRadius: 10)
                .fill(Color.purple.opacity(0.5))
                .frame(width: 300, height: 300)
                .cornerRadius(10)
                .redacted(reason: .privacy)
        }

        VStack(alignment: .center) {
            Text(viewModel.shazamMedia.title ?? "Title")
                .font(.title)
                .fontWeight(.semibold)
                .multilineTextAlignment(.center)
            Text(viewModel.shazamMedia.artistName ?? "Artist Name")
                .font(.title2)
                .fontWeight(.medium)
                .multilineTextAlignment(.center)
        }.padding()

        Spacer()

        Button(action: { viewModel.startOrEndListening() }) {
            Text(viewModel.isRecording ? "Listening…" : "Start Shazaming")
                .frame(width: 300)
        }
        // .controlProminence(.increased) from the early Xcode 13 betas is gone;
        // .borderedProminent gives the same prominent bordered look.
        .buttonStyle(.borderedProminent)
        .controlSize(.large)
        .shadow(radius: 4)
    }
}

To load the images, we use AsyncImage, a new SwiftUI view available from iOS 15. It downloads and displays an image from a URL and shows a placeholder until the image is ready. The Button’s action calls the startOrEndListening() method of ContentViewModel (this view’s source of truth).

Conclusion

Simply run the app on a device running iOS 15 or iPadOS 15, tap the Start Shazaming button, and experience the power of Shazam. That’s the end of this tutorial. You can find the code here. Thank you for reading, and have a nice rest of your day or night.